What we see here is the real user base of LLMs. And 97% of them are free users.

It’s hardly a mystery why no AI company is remotely close to making a profit, ever.

Yup, I’m surprised the bottom hasn’t dropped out from these companies yet. It will be like the dot com crash in the early 2000s I’m guessing. And they’ll act so surprised…

They’re being artificially propped up by billionaires to use as a bludgeon against labor. Profit is less important to them than destroying upward mobility and punishing anyone who thinks about unionizing.

Wish more people caught on to this! The AI wave is not an economic boom and it is not motivated by any sort of consumer demand; it is very much a concerted push by industry to further impoverish the working class on several fronts (monetary, mental, organizational, etc.). That’s why it has continued flying in the face of all economic logic for the past several years.

Yes, like the other commenter replied, the Thiels and Musks of the world want to go back to feudalism. That’s also why, after years of making a big deal that interfering would tarnish the Washington Post, Bezos now seems to not care what happens to its reputation, because he knows that there isn’t an alternative anymore.

The value isn’t the product; that’s garbage.

It’s the politics of the product. It’s the labor discipline, the ability to say ‘computer said so’. Why bomb hospital? Computer said so. Yes, I wanted to bomb hospital, but what I wanted didn’t factor in! I did it because computer (trained to say bomb hospital) said so!

Edit: “deusmachina vult!”

Fuck no, it’ll be much worse than the dotcom bubble. If you want to be terrified, look how much of the stock market is NVIDIA and the big tech companies.

bottom can’t drop out if they never had a bottom

Sorry to break it to you, but AI does have uses, it’s just they are all evil.

Imagine things like identifying (with low accuracy) enemies from civilians in a war zone.

What is discussed in this thread are LLMs, which are a subgroup of AI. What you are referring to, is image recognition, and there are plenty of examples where their use is not evil, e.g. in the medical field.

Yeah exactly, ML is very powerful and can be very useful in niche areas.

LLMs have tainted good AI progress cause it made line go up.

I have a few friends in the field who have started calling it “data analysis with computers”, because “AI” and “Machine Learning” sound like “prompt engineer” nowadays.

That’s so sad

the horrid evils of *checks notes* telling you what species you’re most likely looking at

AI as a concept has many uses that are beneficial!

The beneficial uses are non-profit-seeking uses, which are not the ones seen jammed into everything. Pattern matching is extremely helpful for science, engineering, music, and a ton of other specific purposes in controlled settings.

LLMs/chat bots implemented by profit seeking companies and vomited upon the masses have only evil purposes though.

Have we still learned nothing about enshittification? The implication of this graph is that there’s an entire generation of people being raised right now who won’t be able to do jack shit without depending on AI. These companies don’t need to be profitable right now, because once they’re completely entrenched in the workflows and thought processes of millions of people, they can charge whatever they want. Accuracy and usefulness are secondary to addiction and dependency. If you can afford to amass power and ubiquity first, all the profit you can imagine will come later.

I mean, I failed / dropped out of High school, I’m absolutely fine. I’m not worried for the kids tbh. Kids will always take the path of least resistance when it comes to schoolwork, just the path is now actually getting homework done by an ai instead of just guessing / skipping the assignment. I’m genuinely more worried for all the older generations who don’t realize that because of AI honor roll has 0 meaning now.

I mean, we have had solid proof that giving children homework is counterproductive for at least 2 decades now. Maybe this will be the final straw that actually makes us listen to the experts and just stop giving children homework

The benefits of homework depend on how old the kid is and how much homework they’re getting. Too much homework too early is either a wash or an overall negative, but homework as a concept does have benefits.

> once they’re completely entrenched in the workflows and thought processes of millions of people, they can charge whatever they want

Except that those people won’t have jobs or money to pay for AI.

Fair, but social media shows that enshittification doesn’t have to result in them charging money. Advertising and control over the zeitgeist are plenty valuable. Even if people don’t have money to pay for AI, AI companies can use the enshittified AI to get people to spend their food stamps on slurry made by the highest bidder.

And even if companies have conglomerated into a technofeudal dystopia so advertisement is unnecessary, AI companies can use enshittified AI to make people feel confused and isolated when they try to think through political actions that would threaten the system but connected and empowered when they try to think through subjugating themselves or ‘resisting’ in an unproductive way.

This is why they report “annualized revenue” where they take their best month and multiply it by 12.

It doesn’t even have to be a calendar month, it can be the best 30 days in a row times 12. I’d love to be able to report my yearly income that way when applying to apartments, lmao.
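For anyone curious how far apart the two numbers can end up, here is a minimal sketch of the trick as described above. The daily revenue figures are completely made up, the 360-day "year" is a simplification so that it divides into twelve 30-day months, and `annualized_from_best_window` is a hypothetical helper name, not anyone's actual accounting method.

```python
def annualized_from_best_window(daily_revenue, window=30):
    """Best `window` consecutive days, multiplied by 12 (the trick described above)."""
    if len(daily_revenue) < window:
        raise ValueError("need at least one full window of data")
    current = sum(daily_revenue[:window])
    best = current
    # Slide the window one day at a time, updating the running sum.
    for i in range(window, len(daily_revenue)):
        current += daily_revenue[i] - daily_revenue[i - window]
        best = max(best, current)
    return best * 12


# Made-up data: 360 days at 100/day, except one 30-day spike at 500/day.
daily = [100] * 360
for day in range(90, 120):
    daily[day] = 500

actual_total = sum(daily)
annualized = annualized_from_best_window(daily)
print(actual_total)  # 48000
print(annualized)    # 180000
```

With these invented numbers, one good month turns 48,000 of actual revenue into a headline figure of 180,000.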

Really? Damn, I learned something today. I guess I will report my “annualised” income to the tax office from now on.

Dear tax office, if you’re reading this, 1) hi, 2) this is a joke :)

I also read Ed Zitron’s newsletter!

Gonna be honest, I just listen to the podcast!

Reminder that the bullshit Cursor pulled is entirely legal…

I don’t have sources to link, but last I read, the big two providers make enough money to turn a profit on inference alone; it’s the obscene spending on human talent and compute for new model training that keeps them in the red. If they stopped developing new models they would likely be making money though.

And they are fleecing investors for billions, so big profit in that way lol

Midjourney reported a profit in 2022, and then never reported anything new.

Cursor recently made 1 month of mad profit, by first hiking the price of their product and holding the users basically hostage, and then they stopped offering their most successful product because they couldn’t afford to sell it at that price. They annualized that month, and now “make a profit”.

Basically, Cursor let everyone drive a Ferrari for a hundred bucks a month. Then they said “sorry, it costs 500 a month”. And then said “actually, instead of a Ferrari, here’s a Honda”. Then they subtracted the cost of the Honda from the price of the Ferrari, and called it a record profit.

This is legal somehow

The companies that were raising reasonable revenue compared to their costs (e.g. Cursor) were ones that were buying inference from OpenAI and Anthropic at enterprise rates and selling it to users at retail rates, but OpenAI and Anthropic raised their rates, so that cost was passed on to consumers, who stopped paying for Cursor etc., and now they’re haemorrhaging money.

The problem is that you do need to keep training models for this to make sense.

And you always need at least some human editorialization of models, otherwise the model will just say whatever, learn from itself and degrade over time. This cannot be done by other AIs, so for now you still need humans to make sure the AI models are actually getting useful information.

The problem with this, which many have already pointed out, is that it makes AIs just as unreliable as any traditional media. But if you don’t oversee their datasets at all and just allow them to learn from everything then they’re even more useless, basically just replicating social media bullshit, which nowadays is like at least 60% AI generated anyway.

So yeah, the current model is, not surprisingly, completely unsustainable.

The technology itself is great though. Imagine having an AI that you can easily train at home on 100s of different academic papers, and then run specific analyses or find patterns that would be too big for humans to see at first. Also imagine the impact to the medical field with early cancer detection or virus spreading patterns, or even DNA analysis for certain diseases.

It’s also super good if used for creative purposes (not for just generating pictures or music). So for example, AI makes it possible for you to sing a song, then sing the melody for every member of a choir, and fine tune all voices to make them unique. You can be your own choir, making a lot of cool production techniques more accessible.

I believe once the initial hype dies down, we stop seeing AI used as a cheap marketing tactic, and the bubble bursts, the real benefits of AI will become apparent, and hopefully we will learn to live with it without destroying each other lol.

> The technology itself is great though. Imagine having an AI that you can easily train at home on 100s of different academic papers, and then run specific analyses or find patterns that would be too big for humans to see at first.

Imagine is the key word. I’ve actually tried to use LLMs to perform literature analyses in my field, and they’re total crap. They produce something that sounds true to someone not familiar with a field. But if you actually have some expert knowledge in a field, the LLM just completely falls apart. Imagine is all you can do, because LLMs cannot perform basic literature review and project planning, let alone find patterns in papers that human scientists can’t. The emperor has no clothes.

But I don’t think that’s necessarily a problem that can’t be solved. LLMs and the like are ultimately simply statistical analysis, and if you refine and train them enough, they can absolutely summarise at least one paper at the moment. Google’s NotebookLM is already capable of it, I just don’t think it can quite pull off many of them yet. But the current state of LLMs is not that far off.

I agree with AIs being way overhyped, and I also have a general dislike for them due to the way they’re being used, the people who gush over them, and the surrounding culture. But I don’t think that means we should simply ignore reality altogether. The LLMs from 2 or even 1 year ago are not even comparable to the ones today, and that trend will probably keep going that way for a while. The main issue lies with the ethics of training, copyright, and of course, the replacement of labor in exchange for what amounts to simply a cool tool.

AI. The Boat of big tech.

A giant pit you throw money into and set on fire. I guess it’s a worthwhile investment for Thiel and his gaggle of fascist technocrats. So they can use it to control everyone.

Hell, OpenAI didn’t turn a profit on users paying $200 a month.

Simple evil solution.

Make it part of tuition.

Devil blushes

“Employees will use AI to do their jobs!” AI enthusiasts don’t seem to grasp that if AI can do your job, you’re not going to have a job any more.

That’s why farmers don’t exist, steel plows took ’em all out, similar for painters since cameras were invented.

We have a lot fewer farmers now than we had in the 19th century. Which is overall a good thing, because traditional farm labor sucked and had low productivity, but the people who are pushing LLMs don’t want to increase the population’s wealth, they want to impoverish it (kinda like what happened in the 19th century after farm labor productivity increased massively). And in contrast to increasing farm labor productivity, LLMs don’t even really do much that we really need; they’re practically useless for a lot of what they’re currently used for. LLMs are bullshit generators.

Though I guess it does shine a light on how many jobs weren’t really doing anything useful in the first place. Bullshit generators for bullshit jobs.

There have been scarier graphs in the 2020s 🙄


Climate change wants to have a chat with you


Climate change is scary, but not as scary as the idea of having a high probability of getting a disease right now that might kill me or leave me debilitated with ME/CFS.


I don’t think you’re appreciating just how many people died in the pandemic.

the sooner the ai bubble bursts and takes down big tech with it the better

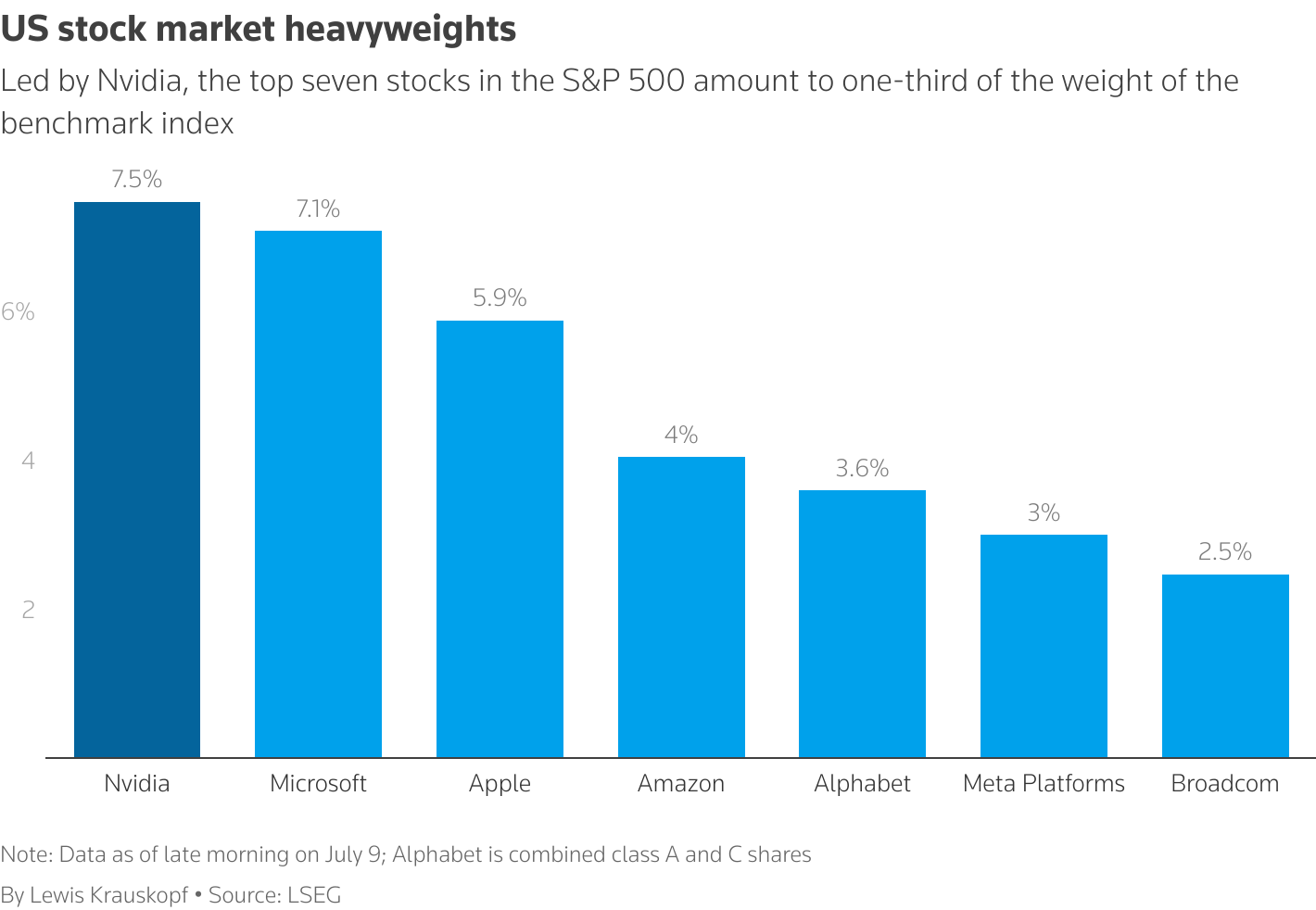

Honestly the AI bubble is going to take the entire stock market with it. Over a quarter of the S&P 500 (an index of the 500 and something most valuable companies on the US Stock Market) is made up of tech companies directly investing in the AI bubble, and most individuals and funds these days invest into indexes rather than individual stocks so when a single overvalued market sector making up over a quarter of the market loses most of its value, every stock portfolio is going to lose a shitload of value.
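To put rough numbers on that, here is a back-of-the-envelope sketch. The 25% weight comes from the "over a quarter" figure above; the 70% drop, the 20% sympathy slide, and the idea that the rest of the market can be treated as one block are assumptions purely for illustration, and `index_loss` is a hypothetical helper.

```python
def index_loss(sector_weight, sector_drop, rest_drop=0.0):
    """Fractional loss of a cap-weighted index when one sector falls by
    `sector_drop` and everything else falls by `rest_drop`."""
    return sector_weight * sector_drop + (1 - sector_weight) * rest_drop


# Assumed scenario: the AI-heavy quarter of the index loses 70%, the rest is flat.
loss = index_loss(0.25, 0.70)
print(f"{loss:.1%}")  # 17.5%

# Same scenario, but the rest of the market also slides 20% in sympathy.
loss_with_contagion = index_loss(0.25, 0.70, rest_drop=0.20)
print(f"{loss_with_contagion:.1%}")  # 32.5%
```

Even with every other sector untouched, an index fund holder eats the sector's loss in proportion to its weight, which is the point being made about index investing.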

If the AI bubble pops, people’s retirement will also probably disappear tbh. Lotta money right now in IRAs and 401ks

Yeah it’ll be bad, but realistically it’ll be like every other major economic calamity. Your accounts lose a ton of value very rapidly, but as long as the money keeps going in (or as long as you have a small enough withdrawal rate if you’re already retired) ultimately it’ll eventually bounce back and then some in the recovery, because when stocks are down that’s the time to pile more money in (buy low sell high)

It depends on the person’s age group, if they just hit retirement and the stock market collapses, then they’d feel the pain the most. It could mean delaying their retirement by years.

Younger people on the other hand usually bounce back, unless of course there are other circumstances like war.

It’s going to happen and we cannot keep subsidizing this shit.

Like, sorry about your imaginary deserves-to-live points, I’m sure your dumb game is very fucked by this, but that money’s on the roulette table and the wheel is spinning. We’ve got productive capacity, electricity, GHGs, and fucking water to salvage.

._. I never said I supported the stock market, just that the stock market collapse will affect a lot of people.

If I had it my way, companies should never be public. Lots of current day issues are because public companies act upon shareholder whims and thus cause the worst of modern day societies

I’d love to have pensions also be reintroduced in companies, but they’ve essentially been all phased out by lobbying for 401ks and IRAs instead.

pensions

Preferable in almost every way, but this vulnerability is shared, right?

Depends on how the pension is set up but yes.

Fair. But typically.

Maybe we should just provide for each other as a society?

I’m hoping it goes rogue. So rogue that AI becomes a boogieman that makes people shit their pants at its mention like in Cyberpunk.

It is a very advanced autocomplete, not a proper AI.

Would be funny if it went rogue while it’s still kinda dumb. Cleaning out a worldwide infestation of mostly harmless semi-self-aware software would surely teach everyone a lesson.

Best possible future

But knowing us, we’d throw that lead by trying to use better AI to fix the AI problem.

What do you mean “goes rogue”? Like, that the program gets corrupted?

Think modern day Morris Worm.

someone sets up a deathbot with an ollama instance running inside, and the text output is parsed into commands

on the outside it just looks like a deathbot killing everyone, but on the inside it’s constantly spewing out inane bullshit while directing the robot to kill everyone.

It will take everything with it. We’re betting the future of the whole global economy on a homework machine.

This dream that we’ll wake up tomorrow and AI will be a profitable product is the only thing saving us from the full fallout of the tariffs.

It’s so much worse than most people could understand from a chart.

June 6th is not when school’s out over here.

So is the hypothesis that OpenAI’s usage is heavily regionally skewed to… wherever classes end that date? I’m guessing US, because that’s what I guess when somebody forgets there’s a planet attached to their country.

Schools in the US don’t all follow the same schedule; it varies drastically state to state, and can even vary by district within any given state.

Even worse for that hypothesis, then. Assuming the poster was from the US in the first place.

Exactly. Some universities end their spring terms in early May. Some in early June. K12 schools tend to more consistently end their years in early June, but that’s still spread out over a few weeks.

Yeah, that kind of coordination coming from the end of the school year doesn’t make sense. Zooming out a bit, it looks like there was just a spike in May 2025. It was all usage of a particular model, OpenAI’s GPT-4o-mini, which barely registers outside of these short periods of high use in March and then May of 2025. I don’t really know what a ‘token’ is, so maybe it’s not a 1:1 comparison when usage shifts between different models? Or the data’s bad? Or some particular project used that model a large amount in those specific months?

A token is the “word” equivalent as far as AI is concerned. It’s just not necessarily a full word; it’s whatever chunk of text the model’s tokenizer has settled on as a unit (so “ish” can be a token by itself, for instance). Point is, tokens processed is just a proxy for “amount of text the thing read and spat out”.
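If it helps to see the idea, here is a toy sketch of splitting text into sub-word pieces. To be clear about what is assumed: the vocabulary is hand-picked for the example, `toy_tokenize` is a hypothetical function, and greedy longest-match is a simplification. Real tokenizers learn their vocabulary from data and will split the same text differently.

```python
def toy_tokenize(text, vocab):
    """Greedy longest-match split of `text` into pieces from `vocab`.
    Characters not covered by the vocabulary become single-character tokens."""
    max_len = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabulary, hand-picked for the example.
VOCAB = {"child", "ish", "fool", "ly", " "}

tokens = toy_tokenize("childish foolishly", VOCAB)
print(tokens)       # ['child', 'ish', ' ', 'fool', 'ish', 'ly']
print(len(tokens))  # 6
```

So two words come out as six tokens here, which is why a token count on a chart is a proxy for volume of text rather than a word count.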

At a glance, and I haven’t looked into it, this looks like a product launch or a product getting replaced or removed, maybe putting something free behind a paywall or whatever. Definitely not the school year ending in a particular place. It’s pretty clearly misinfo, I’d just have to do more homework to figure out what kind than I’m willing to do for this purpose, but your assessment definitely makes a ton more sense than the OP.

I’m guessing any college that wants to hop on trends has an LLM class that chugs through tokens.

Is that relevant to the June date? Universities aren’t any more internationally consistent than other tiers of education, to my knowledge.

Holy shit, you can even see the weekends.

I wonder if the weekend dip is Friday and Saturday with an uptick on Sunday night when students try to do all their weekend homework?

The dips match up with Saturday and Sunday, since June 6th was a Friday.
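The weekday is easy to double-check. A quick sketch, assuming the chart covers 2025 (the year is taken from the May 2025 spike mentioned elsewhere in the thread):

```python
from datetime import date

# Monday is 0 in date.weekday(), so index into a plain list of names.
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

# Assumption: the year is 2025.
june_6 = DAYS[date(2025, 6, 6).weekday()]
print(june_6)  # Friday

# The two days right after it are the weekend.
following = [DAYS[date(2025, 6, d).weekday()] for d in (7, 8)]
print(following)  # ['Saturday', 'Sunday']
```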

The weekend dip would also apply to all the working people using AI

working people

No, some of them have actual jobs.

If you think that’s the scariest graph in the 2020s you have a shit memory of what happened at the beginning of this decade…

I’d argue the scariest graphs are about the climate, but few people seem to care anymore. We are already at 1.5°C global warming. Coastal regions around the world are almost definitely going under by 2100.

cries in dutch

If anyone can out-engineer nature, then it’s you. Please help us out in Sleeswijk Holstein!

The scariest thing about this sentence is realizing we’re already halfway to the next decade

What the hell has happened this decade so far, anyway? 🤦🏻‍♀️ Feels like we made little progress and just took giant steps backwards everywhere.

I can think of a couple of good things!

We proved the efficacy of mRNA vaccines and deployed them at an unprecedented scale against a novel virus that had us all locked in our houses. If it hadn’t worked, our governments were this close to sacrificing us all for the ~~greater good~~ economy anyway, so realistically these vaccines probably saved billions of lives.

We’ve also deployed a huge amount of solar energy and started replacing combustion engines with electric ones on a huge scale in some countries.

There has also been a lot of bad stuff though…

The other J6

For a brief moment, I felt hope.


Trusting unsourced graphs is as stupid as trusting AI.

“June 6 is when school gets out in… uh… all the places where the children are using this AI thingy. Right.”


This is the scariest chart of the 2020s? Not the alarming warming spikes or the more powerful natural disasters? Who gives a shit if kids are cheating in school if the world they’re going to inhabit one day won’t leave them time for book learnin’ because they’re too busy surviving.

Tbf, part of why shit is so bad is because people are uneducated. If they can’t think for themselves then they’ll just believe whatever bullshit Fox News or the algorithm feeds them without critically thinking about it at all.

And we’ve already seen that a company CAN change the “behaviour” of an LLM with a flick of a switch (“MechaHitler”, anyone?), so imagine people being too lazy to research/learn, and a popular LLM being run by malicious actors.

We haven’t even seen a tenth of how much damage poor education can do to the world.

I definitely get it. The chart is bad. I just meant more like…we are so close to that point of no return on the climate. If we haven’t already surpassed it. The damage is done. It’s game over. How bad is it going to get is the question now.

hahahahahaha holy shit it’s not even a scaled graph jesus christ

Yeah without doing any research a consistent 2/3 drop is actually pretty damn compelling. Saving this to check back when US schools restart in a month

Is there a source for the chart? How do we know it’s official data?

https://x.com/mazeincoding/status/1952787361768587741

Here’s the link to the original tweet. A lot of people are saying 1 big client probably switched models, as all the other models rose during the same time period.

can’t wait for my surgeon to ask ChatGPT where the bladder is

“What do ‘left’ and ‘right’ mean again?”

‘ChatGPT? Explain to me like I’m 5 how to remove an inflamed appendix, but use only Roblox terminology… Also, say it like Mr. Krabs from SpongeBob.’

You must be laughing but I work with medical professionals and they do use ChatGPT all the time. Maybe not (yet) for trivial stuff like what you said, but basically for everything else. My boss, a senior physician, basically tells everyone that the end of medical education is near and proposes some form of human-assisted AI in medical practice.

@grok is this the diaphragm? insert pic of cut open patient

When parents fail to reinforce the value of education at a young age, this is the result. I know people personally who openly admitted to using ChatGPT during finals at college, and they aren’t bad people, but they don’t see the value in the serious mastery of education, and they weren’t exactly model students before ChatGPT came around either.

The education system is fundamentally bullshit and they’re not wrong to try to get out of doing the make-work. I just encourage them to do self-education outside of school, because at least then no one’s going to make you write some dumb essay. If ChatGPT had existed when I was in school, you bet your butt I would have used it, and in retrospect I would not have been unjustified in doing so.

> The education system is fundamentally bullshit and they’re not wrong to try to get out of doing the make work.

Just because you failed to see the value of the writings you were assigned does not mean those writings were without value.

I was a smart and hard working student and the system chewed me up and spit me out. I learned more outside of school than in and I regret giving it as much effort as I did, in retrospect.

https://www.self-directed.org/tp/spaces-of-learning/ I’d rather try something like this

School as in post-secondary? Otherwise June 6 seems early

Depends where you live. Where I am at, most schools end at the end of May, but then start around the beginning of August

Yea the official end of school was like June 18th when I was in high school, but there was seldom any real work assigned the last couple weeks. Advanced Placement classes have their exams in May then nothing after.

I just checked the calendar and this year June 6th was a Friday which seems like a likely day for the last assignments of the year to be due.

that’s really late where I live. May 28th is when mine got out

Agreed. My last day of HS was June 12, standard school year schedule. 2nd week of August to 2nd week of June.

In my district in Arkansas school ended May 28th

same in florida

That’s…not how school schedules work.

oh are we writing useless comments, I’ll join

When did your local school district let out for summer? And your local college/university?

I was joining the unconstructive criticism, nothing to do with the content of your comment.

I can’t independently find this chart. Does OpenAI publish their daily usage data?
